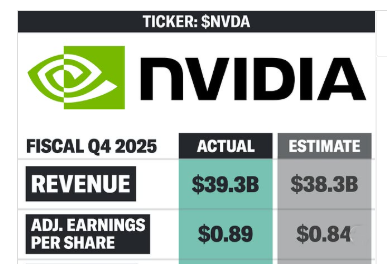

Financial markets spent weeks quietly preparing for Nvidia’s fiscal Q4 2025 earnings. The report does more than present results; it also lays out the company’s plans for the future of artificial intelligence. Nvidia announced record quarterly revenue of $39.3 billion on February 26, 2025. The figure is not only an impressive jump but also a marker of how deeply AI is permeating contemporary industry.

Nvidia’s full-year revenue increased by 114% to $130.5 billion, indicating that its trajectory is no longer merely speculative. The company is evolving from a chip supplier into an orchestrator of digital ecosystems, and it currently sits at the heart of AI hardware infrastructure. CEO Jensen Huang attributed the growth to “agentic and reasoning AI,” a new generation of compute-hungry systems running on Nvidia’s next-generation Blackwell chips.

Nvidia Corporation 2025 Financial Summary

| Metric | Value (FY25/Q4) |
|---|---|
| Total Q4 Revenue | $39.3 billion (↑12% QoQ, ↑78% YoY) |
| Full-Year Revenue (FY25) | $130.5 billion (↑114% YoY) |
| Net Income (Q4) | $22.1 billion (↑80% YoY) |
| Earnings Per Share (GAAP Q4) | $0.89 per share (↑82% YoY) |
| Gross Margin (Q4) | 73.0% (↓3.0 points YoY) |
| Data Center Revenue (Q4) | $35.6 billion (↑93% YoY) |
| Blackwell Sales Impact | Billions booked in first quarter post-ramp |
| Q1 FY26 Revenue Guidance | $43 billion (midpoint), implies 65% YoY growth |
| Dividend Payment Date | April 2, 2025 ($0.01/share) |
| Official Source | NVIDIA Investor Relations |

Why Investors Are Holding Steady Despite Slowing Growth

Although the 78% year-over-year growth in Q4 is unquestionably strong, Nvidia’s guidance for Q1 FY26, an estimated $43 billion in revenue, is more telling. It represents a slower but still impressive 65% year-over-year increase. For most businesses, that figure would mark a breakthrough year. For Nvidia, whose sales more than tripled year over year in the fourth quarter of fiscal 2024, the slowdown looks like the normal maturation of a tech leader operating at scale.
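The growth arithmetic behind these percentages can be checked with a short sketch. It uses only the revenue and growth figures quoted in this article; the helper name `implied_base` is a hypothetical one chosen for illustration, and the backed-out prior-period values are approximations derived from rounded inputs, not figures reported by Nvidia.

```python
def implied_base(current: float, growth_pct: float) -> float:
    """Back out the prior-period figure from a current value and its growth rate."""
    return current / (1 + growth_pct / 100)


# Figures quoted in this article (USD billions, growth in percent).
q4_fy25_revenue = 39.3
q1_fy26_guidance = 43.0

# Year-ago quarters implied by the stated year-over-year growth rates.
q4_fy24_implied = implied_base(q4_fy25_revenue, 78)
q1_fy25_implied = implied_base(q1_fy26_guidance, 65)

# Quarter-over-quarter growth implied by the guidance midpoint.
sequential_growth = (q1_fy26_guidance / q4_fy25_revenue - 1) * 100

print(f"Implied Q4 FY24 revenue: ${q4_fy24_implied:.1f}B")
print(f"Implied Q1 FY25 revenue: ${q1_fy25_implied:.1f}B")
print(f"Guided sequential growth: {sequential_growth:.1f}%")
```

On these inputs, the guidance midpoint works out to roughly 9% sequential growth, compared with the 12% quarter-over-quarter rise reported for Q4, which is one way to quantify the deceleration described above.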

The market isn’t flinching, though. Why? Because Nvidia’s influence now extends beyond the cyclical peaks of hardware demand. Its GPUs, especially the Blackwell architecture, are powering the next generation of long-context AI reasoning, an area that is expanding more quickly than most people expected. Through partnerships with robotics companies, research labs, and hyperscalers, Nvidia is laying the groundwork for an industrial era driven by artificial intelligence.

The Chip That Could Revolutionize Agentic AI: Blackwell

Blackwell could sustain the AI boom that ChatGPT started. Blackwell chips are already bringing in billions of dollars in bookings, according to Nvidia’s CFO Colette Kress, demonstrating that the company’s shift from “training models” to “thinking models” is not only technically ambitious but also commercially validated. What sets these chips apart is that, beyond adding raw compute, they support longer and more complex sequences of inference, conversation, and even reasoning.

Beyond powering datacenters, this architecture affects a wide range of industries, including financial forecasting, logistics, autonomous navigation, and genomics. By creating chips that prioritize memory flow, context retention, and AI modularity, Nvidia is making it easier for systems to reason beyond static prompts, enabling smarter outcomes with fewer computational detours.

Operational Discipline and Margin Pressure

Due to the rising cost of increasingly sophisticated AI chips and data infrastructure, gross margin in Q4 fell to 73%, down from 76% a year earlier. Nvidia remains highly efficient, though: operating income reached $24 billion, and operating expenses rose 9% quarter over quarter, slower than the 12% sequential rise in revenue.

Nvidia exhibits its capacity to strike a balance between innovation and profit by continuing to maintain lean operating ratios even as demand increases. Even though its dividend is small at $0.01 per share, it conveys continuity and stability, which are attributes that investors are increasingly valuing in times of macroeconomic uncertainty.

How Nvidia’s Dominance Influences the Broader Tech Ecosystem

According to a recent Zacks report, only 73% of S&P 500 companies exceeded EPS expectations, a share below previous averages. In contrast, Nvidia’s results came in well ahead of its benchmarks, solidifying its standing as the leading indicator of next-generation computing. Alphabet and Microsoft dominate the cloud AI services market, while Nvidia controls the undercurrent: the silicon, the systems, and increasingly the strategy.

Nvidia is evolving from a supplier of parts to an AI platform by fusing hardware with proprietary software tools like CUDA and TensorRT. Similar to Apple’s early iPhone days, this type of vertical ownership involves defining product categories while maintaining control over the resources that others use to innovate.

From Compute to Cognitive Infrastructure

As generative models develop into agentic intelligence (systems that can think, act, and learn on their own), Nvidia’s infrastructure will be the engine room, powering not just responses but entire environments, workflows, and simulations.

Jensen Huang’s long-term strategy, in which intelligence becomes ubiquitous and computation grows exponentially, may propel Nvidia beyond chips and into end-to-end AI ecosystems in the years to come, encompassing everything from embodied robotics to enterprise automation.